Serving LLMs from Raspberry Pi using Ollama and Cloudflare Tunnel


In this article, we will look at how to run LLMs such as Gemma and Llama 2 with Ollama on a Raspberry Pi, and how to make them reachable from the public internet using Cloudflare Tunnel.

- Gemma and Llama 2 are openly available LLMs released by Google and Meta (Facebook), respectively.
- Ollama is an open-source project that makes it easy to create and run LLMs locally.
- Raspberry Pi is a single-board computer powered by an ARM processor.
- Open WebUI provides a user-friendly web interface for LLMs.
- Cloudflare Tunnel connects the LLMs running locally on the Raspberry Pi to the internet.

Installing Ollama and Open WebUI

Ollama and Open WebUI run as Docker containers on the Raspberry Pi, defined in a docker-compose file.

Ansible is used to install the prerequisites and deploy these containers. The tasks below check out the Open WebUI repository, render the docker-compose file from a Jinja2 template, and start the containers with Docker Compose:

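```yaml
- name: Install Ollama in Raspberry Pi
  become: true
  block:
    # Clone Open WebUI at a pinned tag
    - name: Git checkout Open webui (ollama webui)
      ansible.builtin.git:
        repo: "https://github.com/open-webui/open-webui.git"
        dest: "/home/{{ user }}/open-webui"
        version: "{{ open_webui.git_tag }}"

    # Render the role's Jinja2 template as the compose file used below
    - name: Copy docker compose file
      ansible.builtin.template:
        mode: u=rwx,g=rx,o=rx
        src: "{{ playbook_dir }}/roles/ollama/templates/docker-compose.yml.j2"
        dest: "/home/{{ user }}/open-webui/docker-compose.yml"

    # Start the Ollama and Open WebUI containers.
    # community.docker.docker_compose is the Compose v1 module; community.docker
    # 4.0.0 and later drop it in favour of community.docker.docker_compose_v2.
    - name: Run Docker Compose
      community.docker.docker_compose:
        debug: true
        project_src: "/home/{{ user }}/open-webui"
        files:
          - docker-compose.yml
        state: present
```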
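The role's docker-compose.yml.j2 template is not reproduced in this post. As a rough, hypothetical sketch, a compose file that runs both containers could look like the one below. It is modelled on the compose file shipped in the Open WebUI repository; the image tags, container names, and volume names are assumptions, not the author's actual template.

```yaml
# Hypothetical docker-compose.yml.j2, not the author's template.
# The "version" key suits the Compose v1 tooling used by docker_compose;
# newer Compose releases ignore it.
version: "3.8"

services:
  ollama:
    image: ollama/ollama:latest          # multi-arch image, includes linux/arm64
    container_name: ollama
    volumes:
      - ollama:/root/.ollama             # persist downloaded models across restarts
    restart: unless-stopped

  open-webui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: open-webui
    depends_on:
      - ollama
    ports:
      - "3000:8080"                      # Open WebUI listens on 8080 inside the container
    environment:
      - OLLAMA_BASE_URL=http://ollama:11434   # reach Ollama via its Compose service name
    volumes:
      - open-webui:/app/backend/data     # users, chats and settings
    restart: unless-stopped

volumes:
  ollama:
  open-webui:
```

Because Open WebUI reaches Ollama over the Compose network, the Ollama API does not have to be published on the host in this sketch; only port 3000 is exposed, which is the port the Cloudflare tunnel later points at.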

Once the Ansible playbook has run successfully, Open WebUI is available at http://<ip_addr_of_raspberrypi>:3000. Log in to the application as an admin and head to the Settings > Model page to download the Gemma and Llama 2 models.
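Downloading the models from the UI is the approach used here. If you would rather keep model downloads in the playbook as well, a task along these lines could pull them with the Ollama CLI inside the container. This is a hypothetical sketch: it assumes the Ollama container is named `ollama` (as in the Open WebUI repository's compose file) and uses the `gemma:2b` and `llama2` tags from the Ollama model library.

```yaml
# Hypothetical task, not part of the original playbook.
# Assumes the Ollama container is named "ollama".
- name: Pull Gemma and Llama 2 into Ollama
  become: true
  ansible.builtin.command: "docker exec ollama ollama pull {{ item }}"
  loop:
    - gemma:2b   # the smaller Gemma variant
    - llama2     # default Llama 2 tag (7B)
```

Models pulled this way land in Ollama's data directory, so Open WebUI lists them just like models downloaded from the Settings page.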

Cloudflare tunnel setup

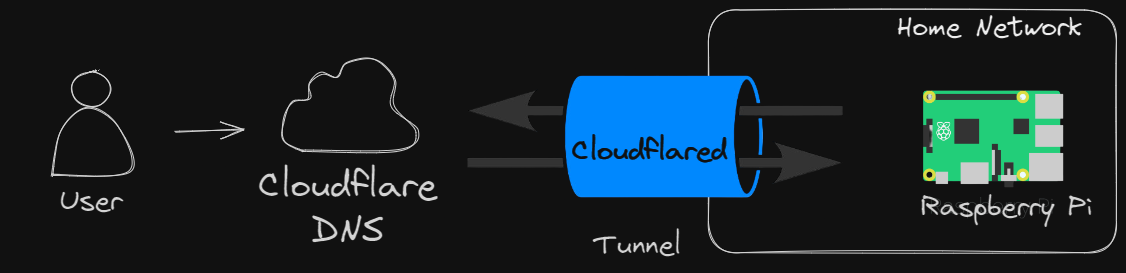

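Cloudflare Tunnel lets you connect the Raspberry Pi to the internet securely: the cloudflared daemon on the Pi makes outbound connections to Cloudflare, so no inbound ports need to be opened on your router.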

i. Install and configure Cloudflared

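```shell
# Download the arm64 package and save it as cloudflared.deb
curl -L --output cloudflared.deb https://github.com/cloudflare/cloudflared/releases/download/2024.2.1/cloudflared-linux-arm64.deb

# Install the package
sudo dpkg -i cloudflared.deb

# Authenticate cloudflared with your Cloudflare account (prints a browser login URL)
cloudflared tunnel login

# Create the tunnel; its credentials are written to ~/.cloudflared/<Tunnel-UUID>.json
cloudflared tunnel create ollama-ai
```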
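Since the rest of the setup is automated with Ansible, the download and install steps could also be folded into the playbook. A minimal sketch, not taken from the original source: `ansible.builtin.apt` can install a `.deb` directly from a URL through its `deb` option. The interactive `cloudflared tunnel login` step, which requires authorizing in a browser, still has to be run by hand.

```yaml
# Sketch only: not part of the original playbook.
- name: Install cloudflared on the Raspberry Pi
  become: true
  block:
    # apt downloads the .deb first when "deb" is a URL, then installs it
    - name: Install the arm64 cloudflared package
      ansible.builtin.apt:
        deb: "https://github.com/cloudflare/cloudflared/releases/download/2024.2.1/cloudflared-linux-arm64.deb"

    # Read-only check that the binary is installed and on the PATH
    - name: Read the installed cloudflared version
      ansible.builtin.command: cloudflared --version
      register: cloudflared_version
      changed_when: false

    - name: Show the cloudflared version
      ansible.builtin.debug:
        var: cloudflared_version.stdout
```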

ii. Create a configuration file

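```yaml
# config.yml in the cloudflared config directory, e.g. /root/.cloudflared/config.yml
# Send all tunnel traffic to Open WebUI on the Pi
url: http://localhost:3000
# UUID printed by "cloudflared tunnel create"
tunnel: <Tunnel-UUID>
credentials-file: /root/.cloudflared/<Tunnel-UUID>.json
```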
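The top-level `url` key sends every request the tunnel receives to a single local service. cloudflared also supports ingress rules, which make the hostname-to-service mapping explicit and would let the same tunnel serve more hostnames later. An equivalent configuration for this setup might look like the sketch below; the hostname is the one routed in the next step, and `<Tunnel-UUID>` remains a placeholder.

```yaml
# Sketch: ingress-rule variant of the config above
tunnel: <Tunnel-UUID>
credentials-file: /root/.cloudflared/<Tunnel-UUID>.json

ingress:
  # Requests for the public hostname go to Open WebUI on port 3000
  - hostname: ai.madhan.app
    service: http://localhost:3000
  # cloudflared requires the last rule to be a catch-all
  - service: http_status:404
```

`cloudflared tunnel ingress validate` checks the rules in the config file, and `cloudflared tunnel ingress rule https://ai.madhan.app` shows which rule a given URL would match.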

iii. Start routing traffic

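```shell
# Route traffic: creates a DNS record (a CNAME to <Tunnel-UUID>.cfargotunnel.com)
# that points ai.madhan.app at the tunnel
cloudflared tunnel route dns ollama-ai ai.madhan.app

# Run the tunnel; requests for ai.madhan.app are proxied to http://localhost:3000
cloudflared tunnel run ollama-ai
```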
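`cloudflared tunnel run` stays in the foreground, so the tunnel stops when that session ends. One way to keep it running across reboots is cloudflared's built-in service installer, which on Linux sets up a systemd service that runs the tunnel from its configuration file. Expressed as Ansible tasks, in keeping with the rest of the setup, it could look like the hypothetical sketch below; the unit file path used to make the install step idempotent is an assumption.

```yaml
# Hypothetical tasks, not part of the original playbook.
- name: Run the Cloudflare tunnel as a service
  become: true
  block:
    # Registers the systemd unit; "creates" skips this step once the unit exists
    # (assumed unit path)
    - name: Install the cloudflared service
      ansible.builtin.command: cloudflared service install
      args:
        creates: /etc/systemd/system/cloudflared.service

    - name: Enable and start the cloudflared service
      ansible.builtin.systemd:
        name: cloudflared
        enabled: true
        state: started
```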

Once the Cloudflare Tunnel setup is complete, the Gemma and Llama 2 models can be accessed through Open WebUI at ai.madhan.app (the site is no longer operational).

Originally published on Medium

🌟 🌟 🌟 The source code for this blog post can be found here 🌟🌟🌟
